James Murray is principal of Seattle IT Edge, a strategic consultancy that melds the technology of IT with the business issues that drive IT solutions. When James gave me a list of things that are central for IT professionals, I thought it might be fun (and hopefully useful) to connect these terms with online surveys for market research.

[Warning: if you are a technical type interested in surveys, you might find this interesting. But if you aren’t in that category, I won’t be offended if you stop reading.]

Scalability

The obvious interpretation of scalability for IT applies to online surveys too. Make sure the survey tool you use is capable of handling the current and predicted usage for your online surveys.

- If you use a SaaS service, such as SurveyGizmo or QuestionPro, does your subscription level allow you to collect enough completed surveys? This isn’t likely to be an issue if you host your own surveys (perhaps with an open-source tool like LimeSurvey), as you’ll have your own database.

- Do you have enough bandwidth to deliver the survey pages, including any images, audio or video that you need? Bandwidth may be more of an issue with self-hosted surveys. Bandwidth might fit more into availability, but in any case think about how your needs may change and whether that would impact your choice of tools.

- How many invitations can you send out? This applies when you use a list (perhaps a customer list or CRM database), but isn’t going to matter when you use an online panel or other invitation method. There are benefits to sending invitations through a survey service (including easy tracking for reminders), but there may be a limit on the number of invitations you can send out per month, depending on your subscription level. You can use a separate mailing service (iContact for example), and some are closely integrated with the survey tool. Perhaps the owner of the customer list wants to send out the invitations, in which case the volume is their concern but you’ll have to worry about integration. Most market researchers should be concentrating on the survey, so setting up their own mail server isn’t the right approach; leave it to the specialists to worry about blacklisting and SPF records.

- Do you have enough staff (in your company or your vendors) to build and support your surveys? That’s one reason why 5 Circles Research uses survey services for most of our work. Dedicated (in both senses) support teams make sure we can deliver on time, and we know that they’ll increase staff as needed.

Perhaps it’s a stretch, but I’d also like to mention scales for research. Should you use a 5-point, 7-point, 10-point or 11-point scale? Are the scales fully anchored (definitely disagree, somewhat disagree, neutral, somewhat agree, definitely agree)? Or do you just anchor the end points? IT professionals are numbers oriented, so this is just a reminder to consider your scales. There is plenty of literature on the topic, but few definitive answers.

Usability

Usability is a hot topic for online surveys right now. Researchers agree that making surveys clear and engaging is beneficial to gathering good data that supports high quality insights. However, there isn’t all that much agreement on some of the newer approaches. This is a huge area, so here are just a few points for consideration:

- Shorter surveys are (almost) always better. The longer a survey takes, the less likely it is to yield good results. People drop out before the end or give less thoughtful responses (lie?) just to get through the survey. The only reason for the “almost” qualifier is that sometimes survey administrators send out multiple surveys because they didn’t include some key questions originally. But the reverse is the problem in most cases. Often the survey is overloaded with extra questions that aren’t relevant to the study.

- Be respectful of the survey taker. Explain what the survey is all about, and why they are helping you. Tell them how long it will take – really! Give them context for where they are, both in the form of textual cues, and also if possible with progress bars (but watch out for confusing progress bars that don’t really reflect reality). Use survey logic and piping to simplify and shorten the survey; if someone says they aren’t using Windows, they probably shouldn’t see questions about System Restore.

- Take enough time to develop and test questions that are appropriate for the audience and the topic. This isn’t just a matter of using survey logic, but of writing the questionnaire correctly in the first place. Although online survey data collection is faster than telephone, it takes longer to develop and test the questionnaire.

- Gamification of surveys is much talked about, but not usually done well. For a practical, business-oriented survey taker, questions that aren’t as straightforward may be a deterrent. On the other hand, a gaming audience may greatly appreciate a survey that appears more attuned to them. Beyond the scope of this article, some research is being conducted within games themselves.
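
For the technically minded, the survey logic and piping mentioned above can be sketched in a few lines of Python. This is only an illustration, not how any particular survey tool works: the `Question` class, the `QUESTIONS` list and the `next_question` function are all hypothetical names, and the question wording is made up around the Windows and System Restore example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

Answers = Dict[str, str]


@dataclass
class Question:
    key: str
    # The text may contain {placeholders} that are piped in from earlier answers.
    text: str
    # Skip logic: the question is shown only if this returns True.
    show_if: Callable[[Answers], bool] = lambda answers: True


# Hypothetical three-question survey, listed in the order it is asked.
QUESTIONS: List[Question] = [
    Question("os", "Which operating system do you use most often?"),
    Question(
        "restore",
        "How often do you use System Restore on {os}?",
        show_if=lambda a: a.get("os") == "Windows",
    ),
    Question("satisfaction", "Overall, how satisfied are you with {os}?"),
]


def next_question(answers: Answers) -> Optional[str]:
    """Return the text of the next question to show, or None when done."""
    for question in QUESTIONS:
        if question.key in answers:
            continue  # already answered
        if not question.show_if(answers):
            continue  # skipped by survey logic
        return question.text.format(**answers)  # piping
    return None


print(next_question({"os": "Linux"}))
```

Someone who answers “Linux” goes straight to the satisfaction question with “Linux” piped into the wording, and never sees the System Restore question.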

Reliability

One aspect of reliability is uptime of the server hosting the survey tool. Perhaps more relevant to survey research are matters related to survey and questionnaire design:

- Representativeness of the sample within the target population is important for quality results, but the target depends on the purpose of the research. If you want to find out if a new version of your product will appeal to a new set of prospects, you can’t just survey customers. An online panel sample is generally regarded as representative of the market.

- How you invite people to take the survey also affects how representative the sample is. Self selection bias is a common issue; an invitation posted on the website is unlikely to work well for a general survey, but may have some value if you just need to hear from those with problems. Survey invitations via email are generally more representative, but poor writing can destroy the benefit.

- As well as who you include and how you invite them, the number of participants is important. Assuming other requirements are met, a sample of 400 yields results that are within ±5% at 95% reliability. The margin of error (±5%) means that the results from the sample should be within that range of the true population’s results. For the numerically oriented, that’s a worst-case number, true for a midpoint (50%) response; statistical testing takes this into account. The reliability number (95%), which statisticians call the confidence level, means that the results will fall within that range 19 times out of 20. You can play with the sample size, or accept different margins of error and confidence levels. For example, a business survey may use a sample of 200 (for cost reasons) that yields results that are within ±7% at 95% reliability.

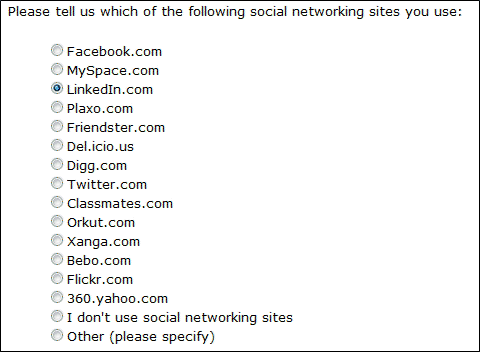

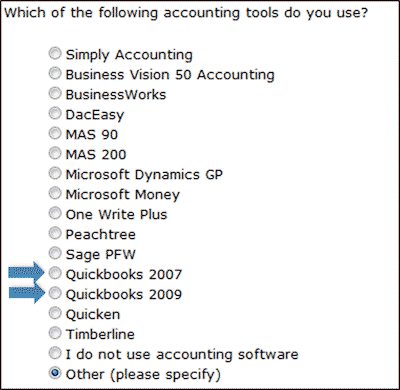

- Another aspect of reliability comes from the questionnaire design. This is a deep and complex subject, but for now let’s just keep it high-level. Make sure that the question text reflects the object of the question, and that the options are mutually exclusive, each express a single thought, and are exhaustive (with don’t know, none of the above, or other/specify as appropriate).
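
If you want to check the sample size numbers above for yourself, here is a minimal sketch using the standard normal-approximation formula for a proportion. It assumes a simple random sample drawn from a large population; the function names and the small table of z-scores are my own choices for illustration, not part of any survey tool.

```python
import math

# Two-sided z-scores for common confidence ("reliability") levels.
Z_SCORES = {0.90: 1.645, 0.95: 1.96, 0.99: 2.576}


def margin_of_error(sample_size, confidence=0.95, proportion=0.5):
    """Margin of error for a proportion; 0.5 is the worst (midpoint) case."""
    z = Z_SCORES[confidence]
    return z * math.sqrt(proportion * (1 - proportion) / sample_size)


def sample_size_for(margin, confidence=0.95, proportion=0.5):
    """Smallest sample size whose margin of error is at most `margin`."""
    z = Z_SCORES[confidence]
    return math.ceil(z ** 2 * proportion * (1 - proportion) / margin ** 2)


for n in (400, 200):
    print(f"n={n}: ±{margin_of_error(n):.1%}")
```

With these assumptions a sample of 400 works out to roughly ±4.9% and a sample of 200 to roughly ±6.9%, which is where the rounded ±5% and ±7% figures come from. Passing a `proportion` further from 0.5 gives a smaller margin, which is why the midpoint is the worst case.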

Security

Considerations for survey security are similar to those for general IT security, with a couple of extra twists.

- Is your data secure on the server? Does your provider (or do you if you are hosting your own surveys) take appropriate precautions to make sure that the data is backed up properly and is guarded against being hacked into?

- Does the connection between the survey taker and the survey tool need to be protected? Most surveys use plain HTTP, but SSL (HTTPS) capabilities are available for most survey tools.

- Are you taking the appropriate measures to minimize survey fraud (ballot stuffing)? What’s needed varies with the type of survey and invitation process, but can include cookies, personalized invitations, and password protection.

- Are you handling the data properly once exported from the survey tool? You need to be concerned with overall data in the same way that the survey tool vendor is. But you also need to look after personally identifiable information (PII) if you are capturing any. You may have PII from the customer list you used for invitations, or you may be asking for this information for a sweepstake. If the survey is for research purposes, ethical standards require that this private information is not misused. ESOMAR’s policy is simple – Market researchers shall never allow personal data they collect in a market research project to be used for any purpose other than market research. This typically means eliminating these fields from the file supplied to the client. If the project has a dual purpose, and the survey taker is offered the opportunity for follow-up, this fact must be made clear.
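
Eliminating PII fields from the client file is easy to script. The sketch below assumes the survey data has been exported as CSV with a header row; the column names in `PII_FIELDS` and the `strip_pii` function are hypothetical, so substitute whatever your own export uses.

```python
import csv
import io

# Hypothetical column names; adjust to match your own export.
PII_FIELDS = {"name", "email", "phone"}


def strip_pii(source, destination, pii_fields=PII_FIELDS):
    """Copy CSV rows from source to destination, dropping PII columns.

    Returns the list of column names that were kept.
    """
    reader = csv.DictReader(source)
    kept = [f for f in (reader.fieldnames or []) if f.lower() not in pii_fields]
    # extrasaction="ignore" silently drops the columns we did not keep.
    writer = csv.DictWriter(destination, fieldnames=kept, extrasaction="ignore")
    writer.writeheader()
    for row in reader:
        writer.writerow(row)
    return kept


export = io.StringIO("respondent_id,email,q1\n1,a@example.com,5\n")
client_file = io.StringIO()
print(strip_pii(export, client_file))
```

Dropping columns only handles PII that sits in its own field. Open-ended comments can contain names or email addresses too, so they still deserve a manual look before the file goes to the client.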

Availability

No longer being involved in engineering, I’d have to scratch my head for the distinction between availability and reliability. But as this is about IT terms as they apply to surveys, let’s just consider making surveys available to the people you want to survey.

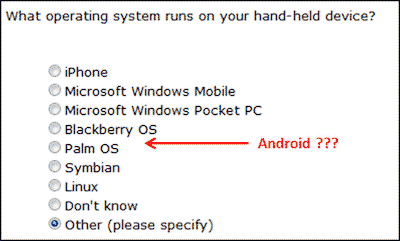

- Be careful about question types that may work well on one platform and not another, or may not be consistently understood by the audience. For example, drag and drop ranking questions look good and have a little extra zing, but are problematic on smartphones. Do you tell the survey taker to try again from a different platform (assuming your tool detects properly), or use a simpler question type? This issue also relates to accessibility (Section 508 of the Rehabilitation Act, or the British Disability Discrimination Act). Can a screen reader deal with the question types?

- Regardless of question types, it is probably important to make sure that your survey is going to look reasonable on different devices and browsers. More and more surveys are being filled out on smartphones and iPads. Take care with fancier look and feel elements that aren’t interoperable across browsers. These days you probably don’t have to worry too much about people who don’t have JavaScript available or turned on, but Flash could still be an issue. For most of the surveys we run, Flash video isn’t needed, and in any case isn’t widely supported on mobile devices. HTML5 or other alternatives are becoming more commonly used.

- Instead of accessing web surveys from any compatible mobile device, consider other approaches to surveying. I’m not a proponent of SMS surveys; they are too limited, need multiple transactions, and may cost the survey taker money. But downloaded surveys on iPad or smartphone have their place for situations where the survey taker isn’t connected to the internet.

I hope that these pointers are meaningful for the IT professional, even with the liberties I’ve taken. There is plenty of information out there, but as you can tell, just like in the IT world there are reasons to get help from a research professional. Let me know what you think!

Idiosyncratically,

Mike Pritchard